Fast AI Inference Engine “SoftNeuro®”

“SoftNeuro®” runs in multiple environments and uses models trained with a variety of deep learning frameworks.

During this roundtable, the development team discussed the engine's development history and possible future directions.

SoftNeuro®

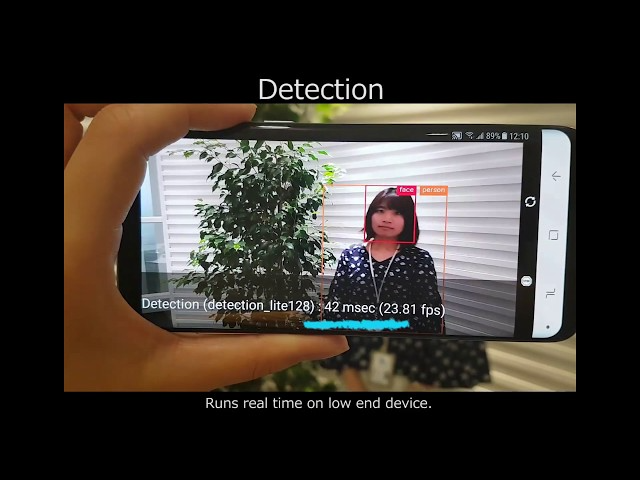

Fast AI Inference Engine

“SoftNeuro®” is one of the world’s fastest deep learning inference engines. It runs in multiple environments and uses models trained with a variety of deep learning frameworks.