Fast AI Inference Engine

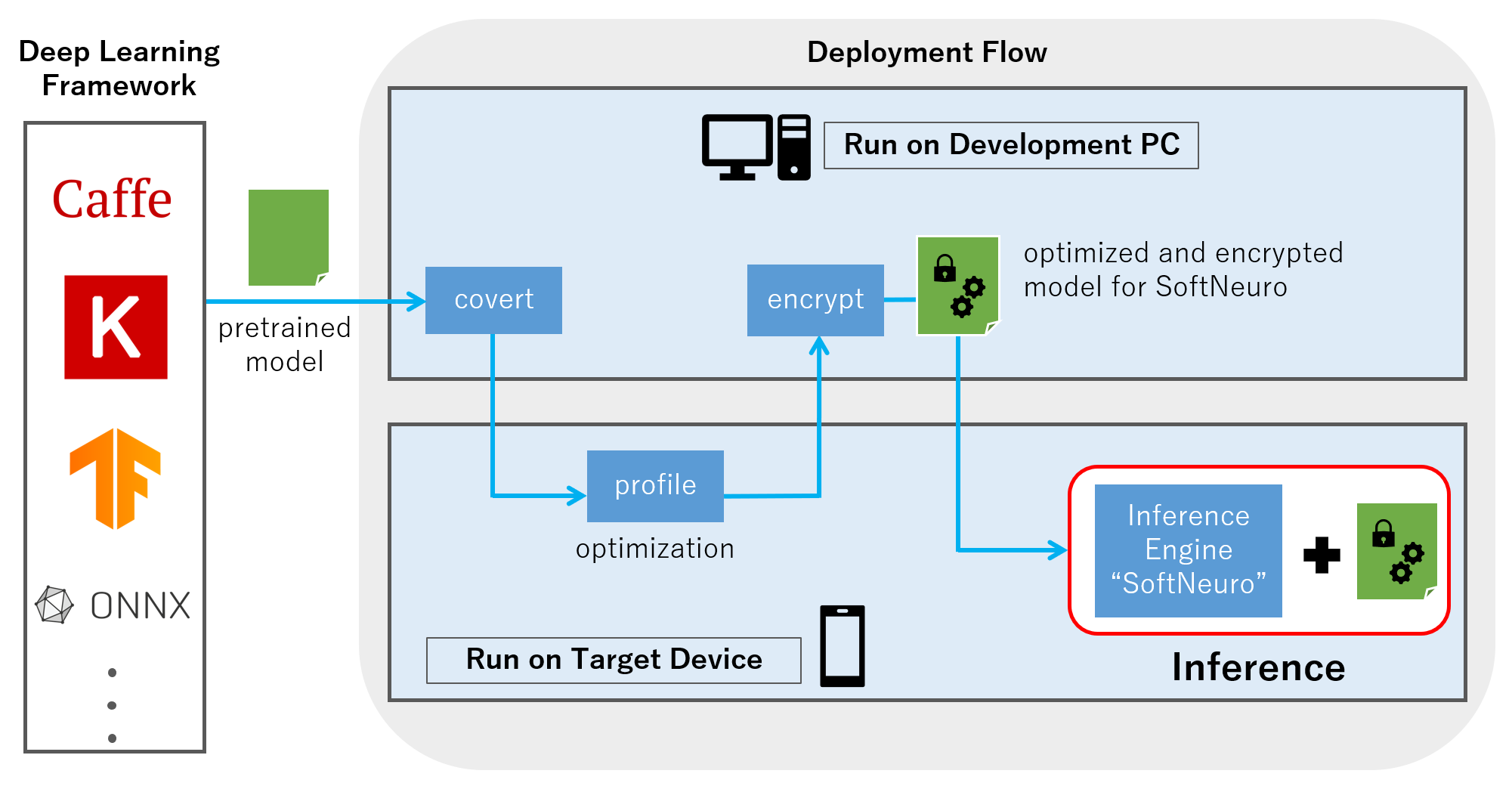

“SoftNeuro®” is one of the world’s fastest (*1) deep learning inference engines. It runs in multiple environments and uses models trained with a variety of deep learning frameworks (Fig. 1). “SoftNeuro®” is also not limited to image recognition: it is a general-purpose inference engine that can be used for text analysis, voice recognition, image recognition, and other tasks.

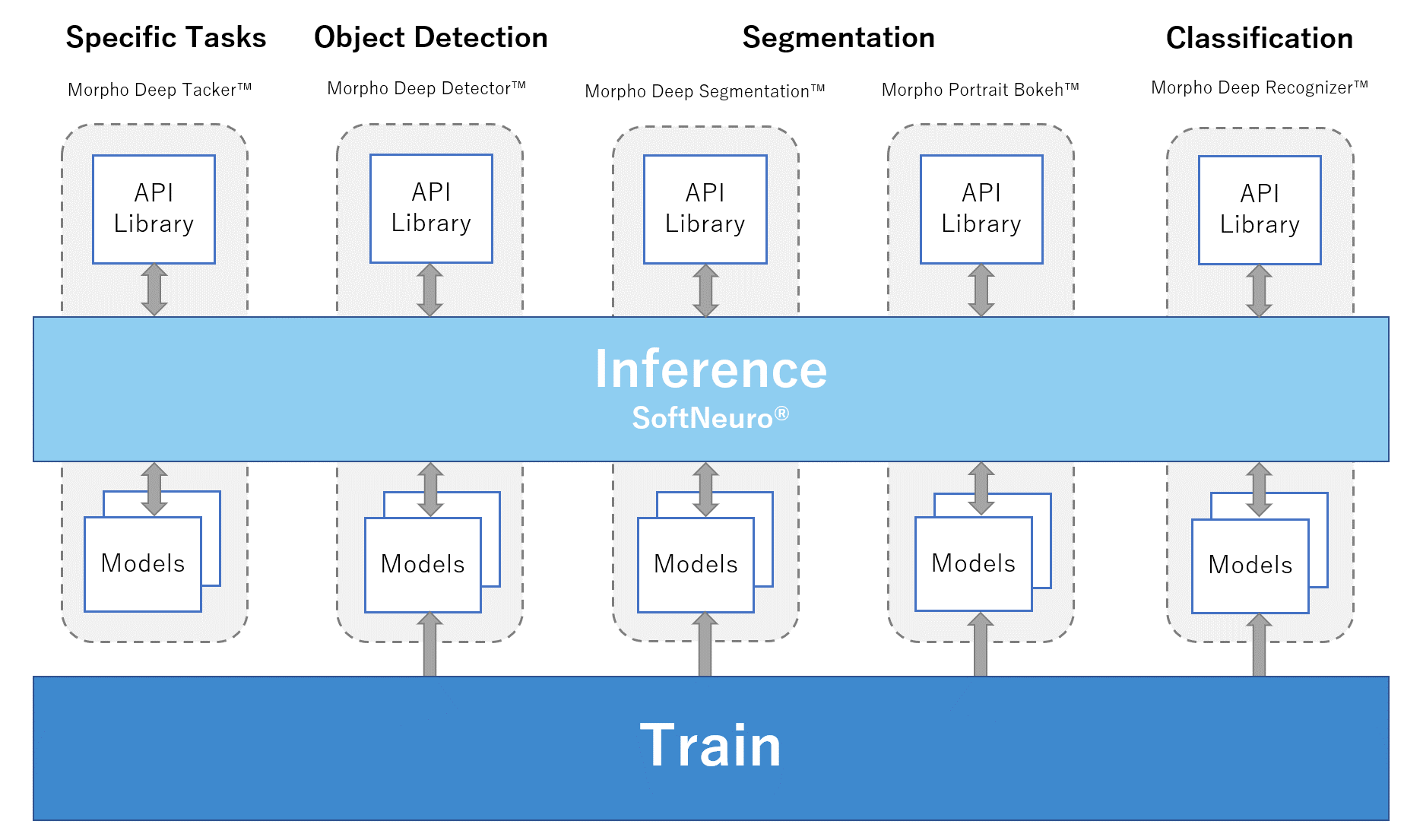

Morpho not only licenses “SoftNeuro®” as a standalone inference engine, but also embeds it in its existing products to substantially increase their processing speed. One example is “Morpho Deep Recognizer™”, Morpho’s image recognition engine.

1. One of the fastest in the world (according to Morpho’s research)

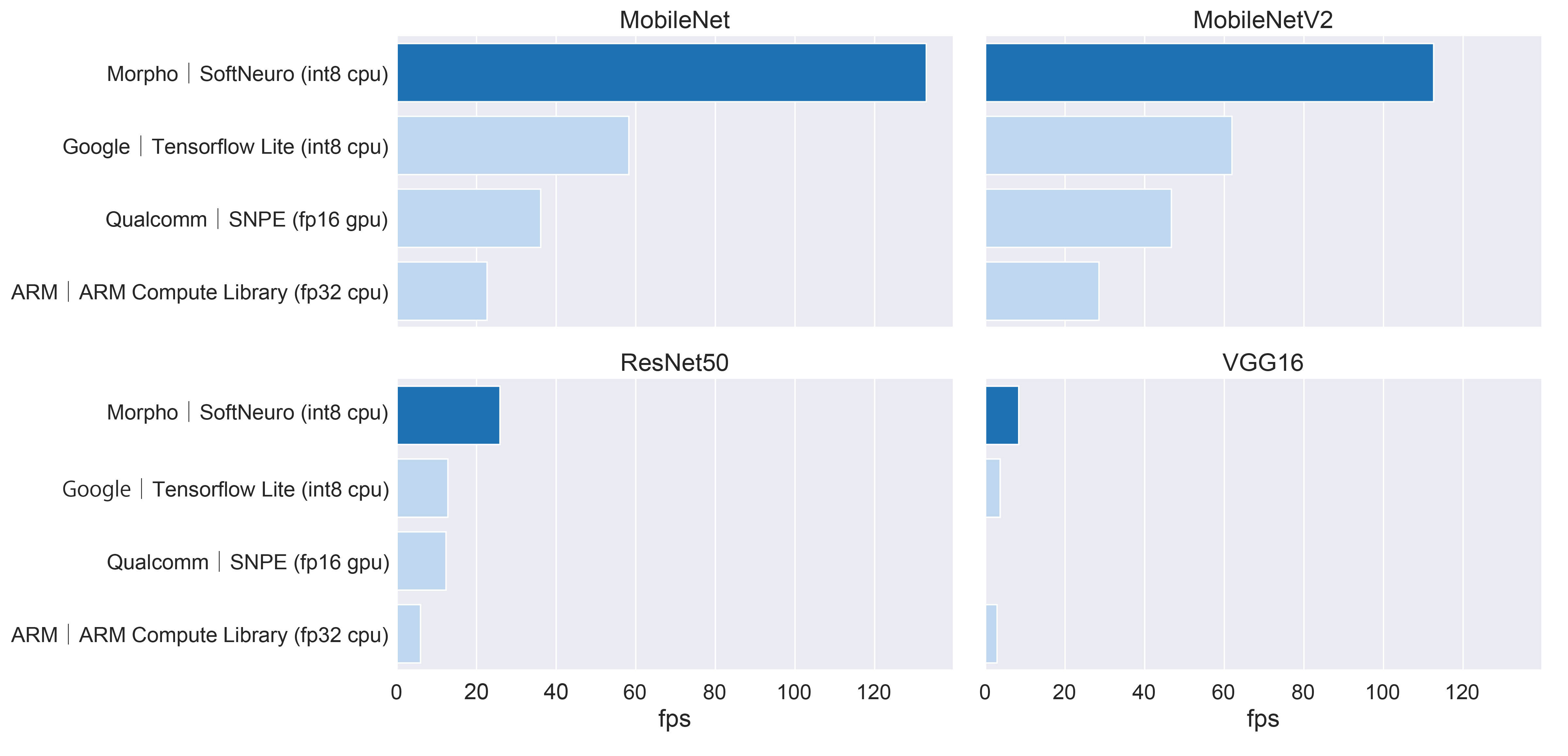

“SoftNeuro®” is as fast as or faster than mainstream inference engines when run on CPUs, while also providing the key features described below. This speed has been achieved through a variety of platform-specific optimizations (of the neural network, memory usage, and more). See *1 for details.

2. Supports multiple frameworks

“SoftNeuro®” achieves fast processing using models trained with the major frameworks (Fig. 1). Users can gain fast inference and multi-platform compatibility without discarding the training assets they have already built up. Support for additional frameworks and layers in “SoftNeuro®” will be expanded over time.

3. Multi-platform compatibility

“SoftNeuro®” is scheduled to support a variety of platforms, with optimization appropriate to each one (CPU speed-up instructions, and use of GPUs, DSPs, and other specialized hardware).

Multi-platform compatibility facilitates deploying the learning results on a wide range of platforms. It also provides flexibility to quickly respond to changes in platforms and hardware trends.

4. Compatible with secure file formats

“SoftNeuro®” can encrypt trained networks, minimizing the risk of leaking machine learning know-how and learned model parameters.

*1: One of the fastest in the world (according to Morpho’s research)

We compared the main publicly available inference engines that were available to us, measuring inference processing speed in a CPU environment.

The inference processing speed measurement results are shown in Fig. 2.
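As a rough illustration of how a CPU inference-speed comparison like this could be timed, here is a minimal Python sketch. It is not Morpho’s benchmark procedure and does not use any “SoftNeuro®” API. The `measure_latency_ms` helper, the `dummy_engine` stand-in, the warm-up and run counts, and the assumption of three color channels are all hypothetical choices made for this example.

```python
import statistics
import time
from typing import Callable, Sequence


def measure_latency_ms(infer: Callable[[Sequence[float]], object],
                       sample: Sequence[float],
                       warmup: int = 5,
                       runs: int = 30) -> dict:
    """Time repeated calls to `infer` on one input; report milliseconds."""
    # Untimed warm-up calls so one-time setup costs do not skew the results.
    for _ in range(warmup):
        infer(sample)

    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        infer(sample)
        timings.append((time.perf_counter() - start) * 1000.0)

    return {
        "runs": runs,
        "median_ms": statistics.median(timings),
        "min_ms": min(timings),
        "max_ms": max(timings),
    }


def dummy_engine(pixels: Sequence[float]) -> float:
    # Hypothetical stand-in for an engine's inference call (NOT a real API).
    return sum(pixels) / len(pixels)


# One 224x224 image flattened to a list; three channels is an assumption.
image = [0.5] * (224 * 224 * 3)
result = measure_latency_ms(dummy_engine, image)
print(f"median latency: {result['median_ms']:.3f} ms over {result['runs']} runs")
```

To compare engines, each engine’s inference call would be passed as `infer` with the same input on the same device. Reporting the median rather than the mean reduces the effect of occasional slow runs.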

●Figure 1. Integrating “SoftNeuro” into Machine Learning Systems

●Figure 2. Comparison of Inference Speeds (CPU: ARM)

Measured on: Qualcomm Snapdragon 855 (image size used for inference: 224×224 pixels)

●Relations Among AI Products

Vision Product of the Year Awards 2018